Amazon S3 is storage for the Internet, designed to make web-scale computing easier for developers. It is loosely comparable to a service such as Google Drive: a simple web service that can store and retrieve any amount of data from anywhere on the web.

It gives any developer access to the same highly scalable, reliable, fast and inexpensive data storage infrastructure, and it follows the ‘pay-as-you-go’ model. Any file can be uploaded to S3, which means that any static content can be shared.

Amazon S3 is an object store, while Elastic Block Store (EBS) provides block-level volumes that are attached to an EC2 instance and formatted with a file system, much like a hard disk under an operating system. Performance-wise, EBS is faster, since its traffic stays inside the data centre. EBS is zone-specific: whenever a user creates an EBS volume, the data is replicated for backup within that Availability Zone, whereas S3 data is replicated across multiple zones so that it can survive the failure of two of them. EBS is accessed from EC2, and S3 is accessed over the internet according to its access policy. S3 is suited to static content, and EBS is suited to a file system.

Suppose a web application has a lot of image files. If the images are uploaded to the web server, the server takes time to process and deliver each one. If they are served through S3 instead, delivery is faster.

Amazon started its cloud services with S3 in 2006. By 2013, there were over 2 trillion objects in S3.

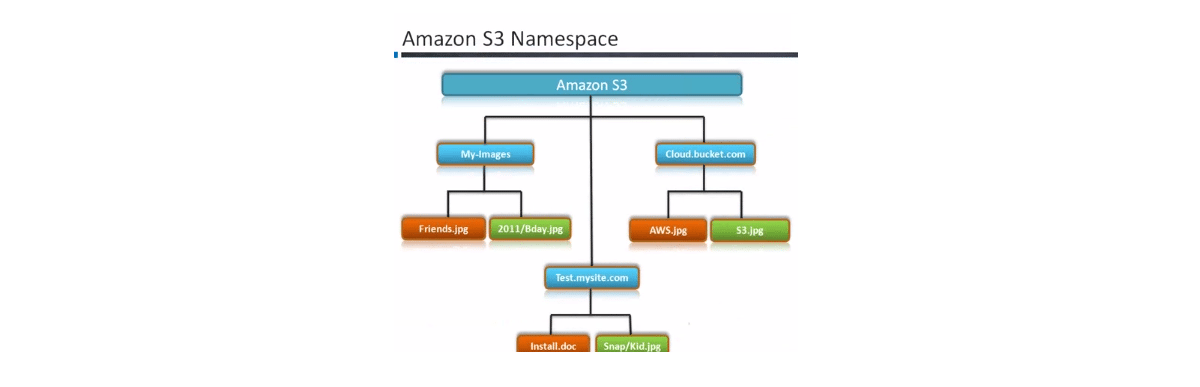

The S3 concept revolves around buckets and objects. A file normally carries a little metadata, but in S3 the data is stored together with a richer set of metadata, which is why it is called an object; S3 is object-based storage. Metadata includes the name of the object, its size and its date. An object cannot exist on its own; it has to live in a bucket, which is a container for objects. An account can hold many buckets (the default limit has been 100 per account), and a bucket can hold any number of objects. Each object can range in size from 1 byte to 5 terabytes.

A key is the unique identifier for an object within a bucket, and every object in a bucket has exactly one key. Each object also has an access control list (ACL), which determines whether the object can be shared across the internet.
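
The relationship between bucket, key, object and metadata can be sketched with a small in-memory model. This is only an illustration of the concepts, not the S3 API: the class names `Bucket` and `S3Object`, their methods, and the bucket name `my-images` are all hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class S3Object:
    """Hypothetical object: the stored bytes plus descriptive metadata."""
    key: str
    data: bytes
    metadata: dict = field(default_factory=dict)
    last_modified: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc)
    )

    @property
    def size(self) -> int:
        # Size is derived from the stored bytes.
        return len(self.data)


class Bucket:
    """Hypothetical bucket: a flat container mapping each key to one object."""

    def __init__(self, name):
        self.name = name
        self._objects = {}  # key -> S3Object

    def put(self, key, data, **metadata):
        # Writing to an existing key replaces the object stored under it.
        obj = S3Object(key, data, dict(metadata))
        self._objects[key] = obj
        return obj

    def get(self, key):
        return self._objects[key]

    def delete(self, key):
        self._objects.pop(key, None)

    def keys(self):
        return sorted(self._objects)


bucket = Bucket("my-images")
bucket.put("Friends.jpg", b"abc", content_type="image/jpeg")
print(bucket.keys())                   # ['Friends.jpg']
print(bucket.get("Friends.jpg").size)  # 3
```

Because the key is the only handle on an object, a second `put` with the same key overwrites the first rather than creating a duplicate.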

The first step is to sign up for Amazon S3. The user can then create a bucket, add an object to the bucket, view an object, move an object, and delete an object or a bucket.

Amazon S3 stores data as objects within buckets. An object consists of a file and, optionally, any metadata that describes that file. To store an object in Amazon S3, the user uploads the file to a bucket.

To transfer a large amount of data, Amazon offers the Import/Export service, which helps move data into and out of S3.

A bucket holds objects. The bucket name must be unique across all Amazon accounts, since the bucket name is always part of the URL. The S3 management console also has the concept of a folder. A bucket cannot contain another bucket, but inside a bucket the user can have folders (groupings of multiple objects).

In this example there are two buckets, named “My-Images” and “Cloud.bucket.com”. The first bucket has two objects, “Friends.jpg” and “2011/Bday.jpg”. Here “2011/” acts as a folder: it is simply a prefix on the object key, and the console may represent the folder itself as an empty object. Any object inside a folder has the folder name in its URL, which is used for grouping within the bucket.
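
Since a folder is only a shared prefix on object keys, grouping can be sketched as plain string handling. The helper below is hypothetical (the name `list_folder` and its parameters are assumptions for illustration); it loosely mirrors the prefix-and-delimiter idea behind S3 listings but does not call S3.

```python
def list_folder(keys, prefix="", delimiter="/"):
    """Split the keys under `prefix` into direct objects and sub-folders."""
    objects, folders = [], set()
    for key in keys:
        if not key.startswith(prefix):
            continue
        rest = key[len(prefix):]
        if delimiter in rest:
            # Everything up to the next delimiter is treated as a folder name.
            folders.add(prefix + rest.split(delimiter, 1)[0] + delimiter)
        elif rest:
            objects.append(key)
    return sorted(objects), sorted(folders)


keys = ["Friends.jpg", "2011/Bday.jpg"]
print(list_folder(keys))           # (['Friends.jpg'], ['2011/'])
print(list_folder(keys, "2011/"))  # (['2011/Bday.jpg'], [])
```

The bucket itself stays flat; the folder view is computed from the keys each time.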

Whenever the user uploads an object, it can be reached through a URL, subject to the object's access permissions. The URL can take two forms: virtual-hosted-style, bucketname.s3.amazonaws.com/objectname, or path-style, s3.amazonaws.com/bucketname/objectname.
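
The two URL forms differ only in where the bucket name goes. A minimal sketch, assuming the global `s3.amazonaws.com` endpoint and HTTPS; the function names and the bucket name `my-images` are hypothetical:

```python
from urllib.parse import quote

S3_ENDPOINT = "s3.amazonaws.com"  # assumed global endpoint


def virtual_hosted_url(bucket, key, endpoint=S3_ENDPOINT):
    """Bucket name appears as a subdomain of the endpoint."""
    return f"https://{bucket}.{endpoint}/{quote(key)}"


def path_style_url(bucket, key, endpoint=S3_ENDPOINT):
    """Bucket name appears as the first path segment."""
    return f"https://{endpoint}/{bucket}/{quote(key)}"


print(virtual_hosted_url("my-images", "2011/Bday.jpg"))
# https://my-images.s3.amazonaws.com/2011/Bday.jpg
print(path_style_url("my-images", "2011/Bday.jpg"))
# https://s3.amazonaws.com/my-images/2011/Bday.jpg
```

`quote` leaves the `/` in the key untouched, so the folder prefix stays visible in the URL.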

Dr. Werner Vogels masterminded Amazon Web Services. According to Gartner Research, Amazon leads the public IaaS market with an 80% share. The CAP theorem deals with consistency, availability and partition tolerance: when a network partition occurs, a distributed system cannot guarantee both of the other two. Amazon S3 favours availability and partition tolerance and relaxes consistency. Focusing on some of these properties means compromising on another; for example, if the user prioritizes availability and partition tolerance, then consistency is likely to be compromised, and so on.

Under eventual consistency, it takes time for a change to become visible everywhere, because Amazon S3 stores data on multiple storage clusters. Once the user uploads an object, it has to be replicated across those clusters before every read sees it consistently.
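
The effect of delayed replication can be sketched with a toy store. This is a simplified illustration under stated assumptions, not how S3 is implemented: the class `EventuallyConsistentStore`, the fixed three replicas, and the explicit `sync()` step are all hypothetical.

```python
class EventuallyConsistentStore:
    """Toy store: a write lands on one replica and reaches the rest on sync()."""

    def __init__(self, replica_count=3):
        self.replicas = [{} for _ in range(replica_count)]
        self.pending = []  # (key, value) writes not yet replicated

    def put(self, key, value):
        # The write is acknowledged after reaching the first replica only.
        self.replicas[0][key] = value
        self.pending.append((key, value))

    def get(self, key, replica=0):
        # A read may be served by any replica, including a stale one.
        return self.replicas[replica].get(key)

    def sync(self):
        # Replication catches up: apply pending writes to the other replicas.
        for key, value in self.pending:
            for replica in self.replicas[1:]:
                replica[key] = value
        self.pending.clear()


store = EventuallyConsistentStore()
store.put("Friends.jpg", "v1")
print(store.get("Friends.jpg", replica=0))  # v1
print(store.get("Friends.jpg", replica=2))  # None (not replicated yet)
store.sync()
print(store.get("Friends.jpg", replica=2))  # v1
```

Acknowledging the write before every replica has it is what buys the lower latency, at the cost of a window in which reads can disagree.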

When a change has not yet been replicated across Amazon S3, a read or listing issued immediately after a write, overwrite or delete may return stale results.

When preparing for the Amazon exam, the most common question is ‘Which consistency model is followed in which region?’. Historically, the US Standard region followed the eventual consistency model, whereas other regions offered ‘read-after-write’ consistency for newly created objects. For updates and deletes, the eventual consistency model applied in all regions. Since December 2020, S3 provides strong read-after-write consistency in every region.

Another point to note is that S3 does not lock objects against concurrent writes, and all objects are addressed by key. The advantage of the ‘eventual consistency model’ is that it provides lower latency and higher throughput.

Most NoSQL databases also follow the ‘eventual consistency model’.

Bucket policies provide centralized access control to buckets. Access to a bucket can be granted in two ways: a bucket policy or an access control list (ACL). With a bucket policy, the user can grant customized access based on conditions such as a particular user, account or time window (for example, business hours). ACLs, by contrast, can only add (grant) permissions on individual buckets and objects, whereas policies can both grant and deny permissions across all or a subset of the objects in a bucket.

Each bucket and object in Amazon S3 has an ACL that defines its access control policy. An ACL can hold up to 100 grants; beyond that, the user can switch to a bucket policy.
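
A bucket policy is a JSON document attached to the bucket. As a minimal sketch, the snippet below builds a policy that allows anyone to read objects from a bucket; the helper name `public_read_policy`, the `Sid` value and the bucket name `my-images` are assumptions for illustration, and a real policy would normally be narrower.

```python
import json


def public_read_policy(bucket_name):
    """Build a policy document granting read access to every object."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowPublicRead",  # hypothetical statement id
                "Effect": "Allow",
                "Principal": "*",
                "Action": "s3:GetObject",
                # The /* suffix applies the statement to all keys in the bucket.
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            }
        ],
    }


print(json.dumps(public_read_policy("my-images"), indent=2))
```

One statement here covers every object in the bucket, which is the centralized control that per-object ACL grants cannot express.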

Libraries for all common languages are catalogued in the resource center. There are multiple developer tools (S3 Sync, S3 Firefox Organizer, AWSZone) and applications (Zmanda Backup, S3 Backup, Oracle 11g and many more).

Amazon S3 supports both REST and SOAP APIs, but SOAP is only supported over HTTPS. The S3 APIs can also be used to manage bucket policies. S3 is useful for media sharing, on-premises backup and application storage.
