Informatica Certification Training Course

- 20k Enrolled Learners

- Weekend

- Live Class

In the previous blog of Top Informatica Interview Questions You Must Prepare For In 2023, we went through all the important questions which are frequently asked in Informatica Interviews. Lets take further deep dive into the Informatica Interview question and understand what are the typical scenario based questions that are asked in the Informatica Interviews.

In the day and age of Big Data, success of any company depends on data-driven decision making and business processes. In such a scenario, data integration is critical to the success formula of any business and mastery of an end-to-end agile data integration platform such as Informatica Powercenter 9.X is sure to put you on the fast-track to career growth. There has never been a better time to get started on a career in ETL and data mining using Informatica PowerCenter Designer.

Go through this Edureka video delivered by our Informatica certification training expert which will explain what does it take to land a job in Informatica.

If you are exploring a job opportunity around Informatica, look no further than this blog to prepare for your interview. Here is an exhaustive list of scenario-based Informatica interview questions that will help you crack your Informatica interview. However, if you have already taken an Informatica interview, or have more questions, we encourage you to add them in the comments tab below.

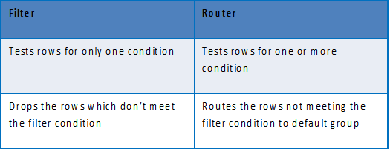

| Source Qualifier Transformation | Filter Transformation |

| 1. It filters rows while reading the data from a source. | 1. It filters rows from within a mapped data. |

| 2. Can filter rows only from relational sources. | 2. Can filter rows from any type of source system. |

| 3. It limits the row sets extracted from a source. | 3. It limits the row set sent to a target. |

| 4. It enhances performance by minimizing the number of rows used in mapping. | 4. It is added close to the source to filter out the unwanted data early and maximize performance. |

| 5. In this, filter condition uses the standard SQL to execute in the database. | 5. It defines a condition using any statement or transformation function to get either TRUE or FALSE. |

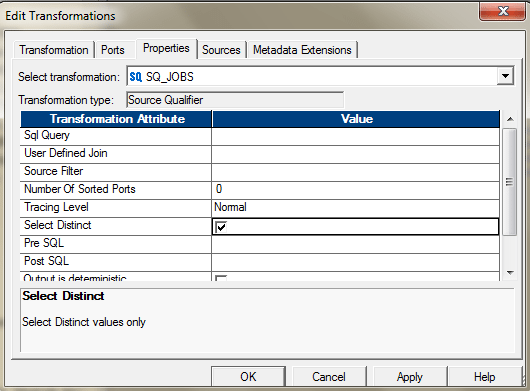

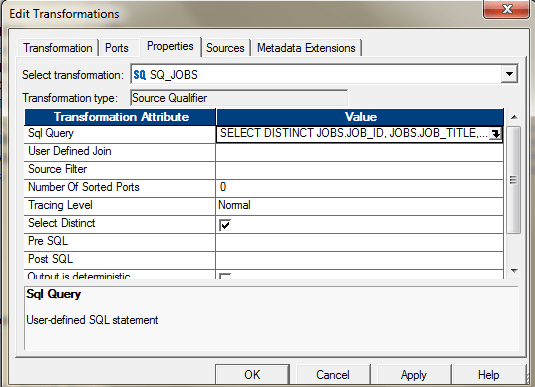

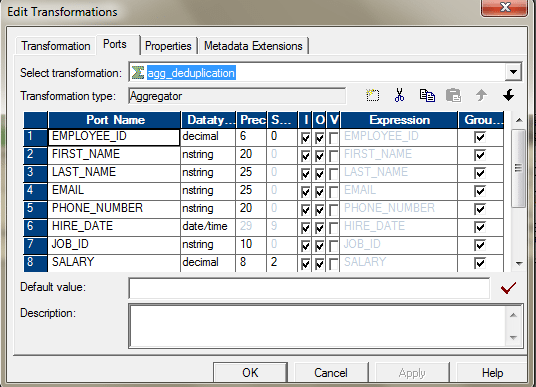

There are several ways to remove duplicates.

Or you can also use the SQL Override to perform the same.

Or you can also use the SQL Override to perform the same.

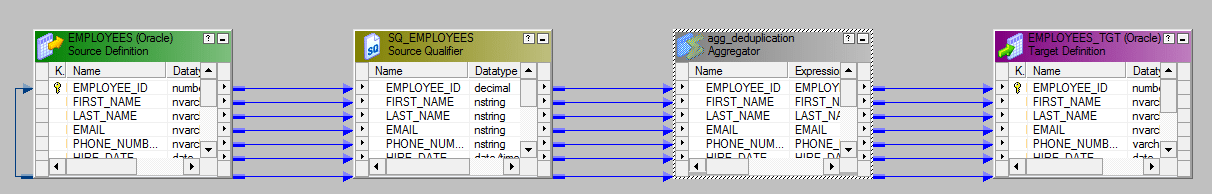

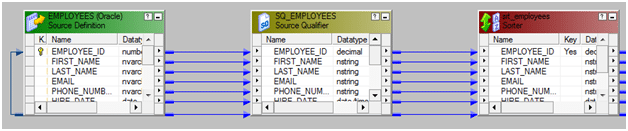

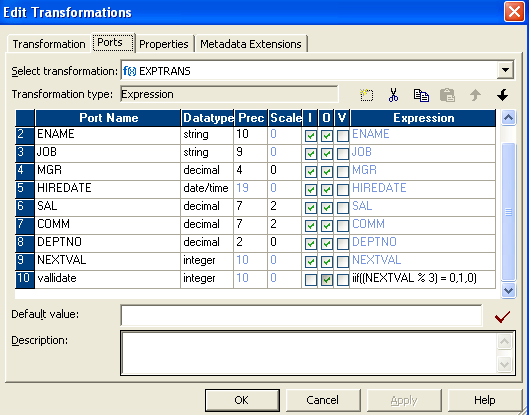

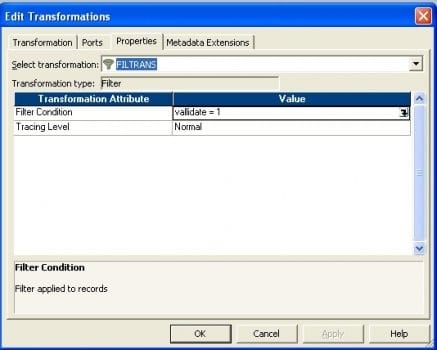

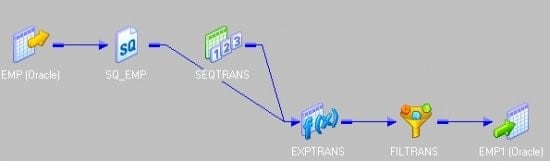

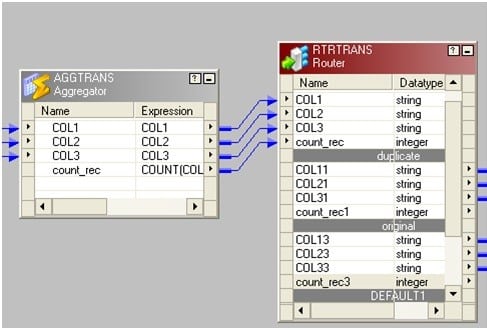

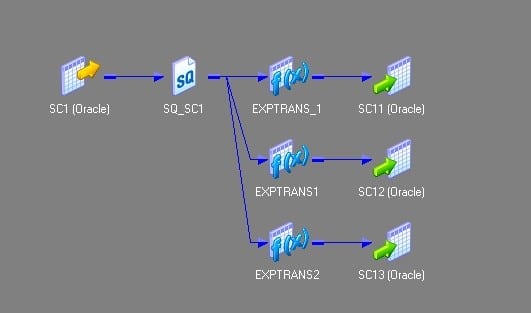

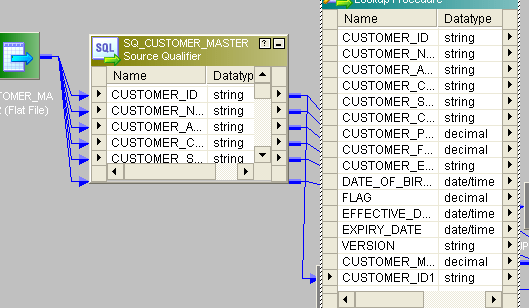

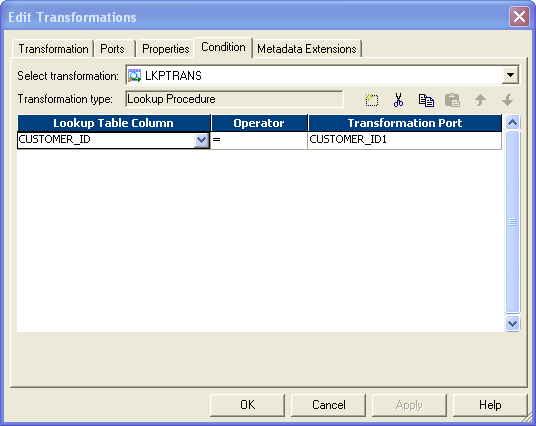

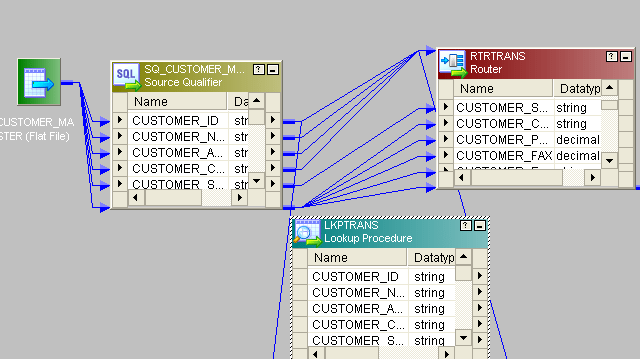

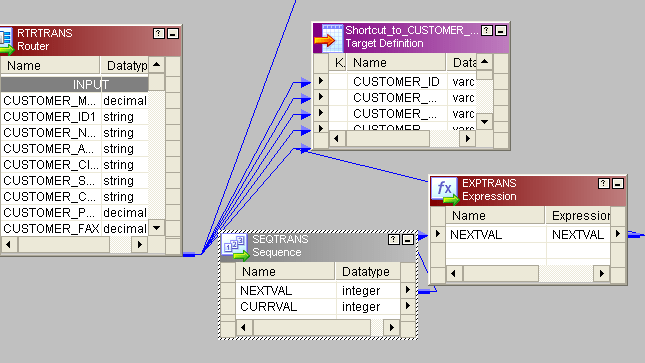

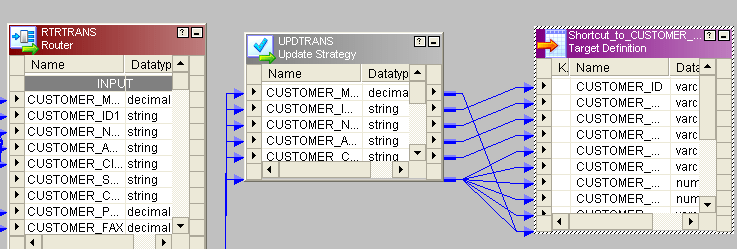

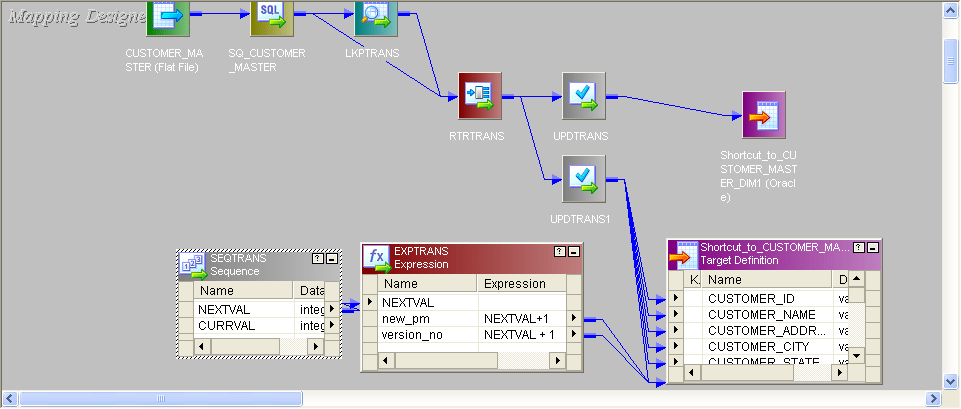

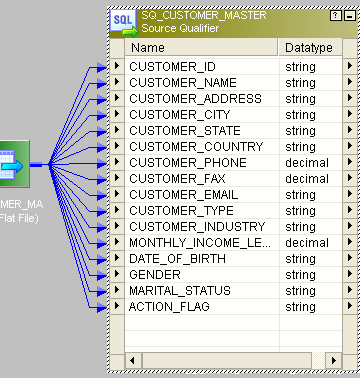

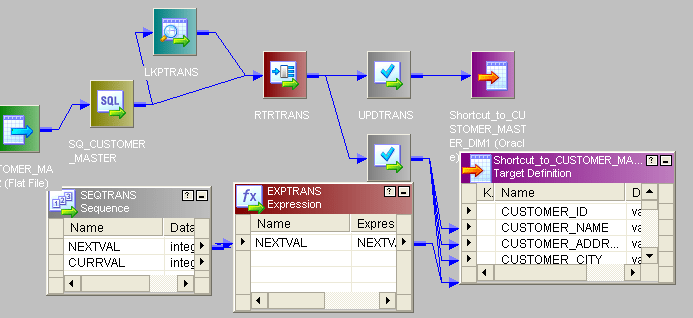

The Mapping will look like this.

The Mapping will look like this.

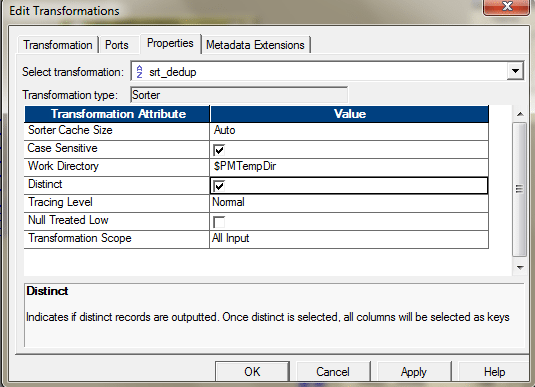

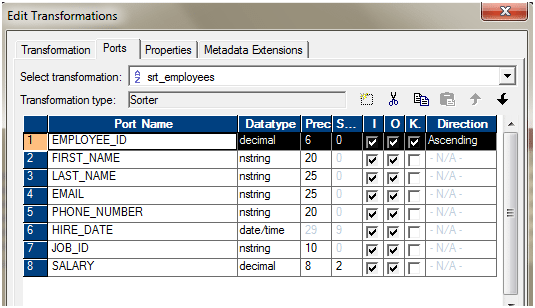

Configure the Sorter as mentioned below.

Configure the Sorter as mentioned below.

v. When you change the property of the Lookup transformation to use the Dynamic Cache, a new port is added to the transformation. NewLookupRow.

The Dynamic Cache can update the cache, as and when it is reading the data.

If the source has duplicate records, you can also use Dynamic Lookup cache and then router to select only the distinct one.

The Source Qualifier can join data originating from the same source database. We can join two or more tables with primary key-foreign key relationships by linking the sources to one Source Qualifier transformation.

If we have a requirement to join the mid-stream or the sources are heterogeneous, then we will have to use the Joiner transformation to join the data.

Below are the differences between lookup and joiner transformation:

Lookup transformation in a mapping is used to look up data in a flat file, relational table, view, or synonym. We can also create a lookup definition from a source qualifier.

We have the following types of Lookup.

Below are the ways in which you can improve the performance of Joiner Transformation.

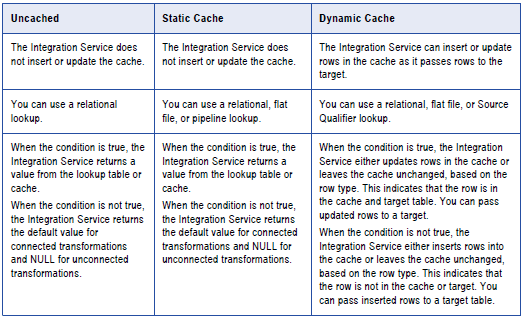

Based on the configurations done at lookup transformation/Session Property level, we can have following types of Lookup Caches.

Based on the types of the Caches configured, we can have two types of caches, Static and Dynamic.

The Integration Service performs differently based on the type of lookup cache that is configured. The following table compares Lookup transformations with an uncached lookup, a static cache, and a dynamic cache:

Persistent Cache

By default, the Lookup caches are deleted post successful completion of the respective sessions but, we can configure to preserve the caches, to reuse it next time.

Shared Cache

We can share the lookup cache between multiple transformations. We can share an unnamed cache between transformations in the same mapping. We can share a named cache between transformations in the same or different mappings.

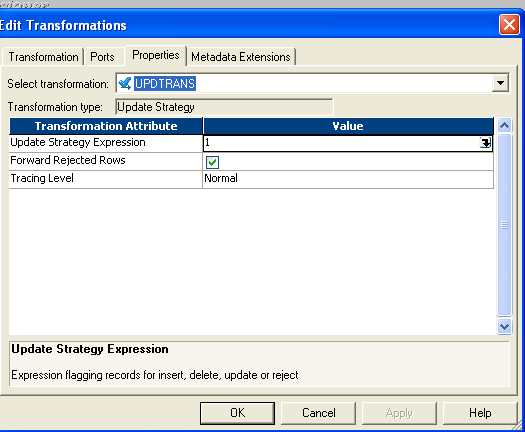

We can use the session configurations to update the records. We can have several options for handling database operations such as insert, update, delete.

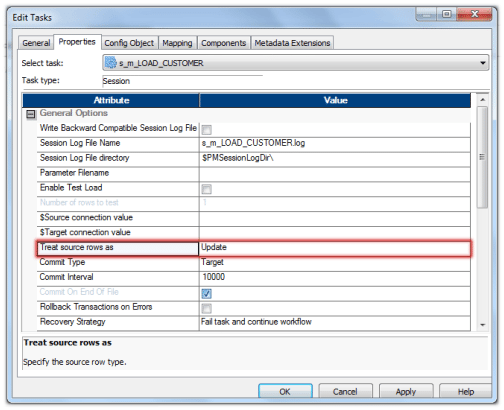

During session configuration, you can select a single database operation for all rows using the Treat Source Rows As setting from the ‘Properties’ tab of the session.

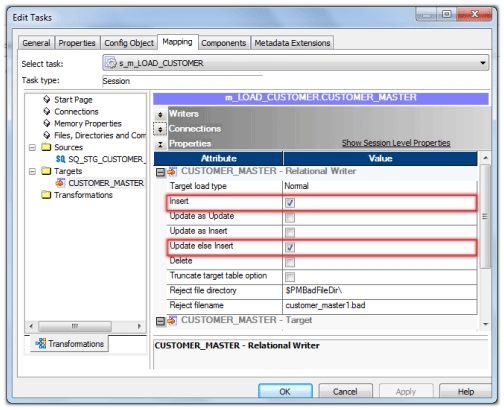

Once determined how to treat all rows in the session, we can also set options for individual rows, which gives additional control over how each rows behaves. We need to define these options in the Transformations view on mapping tab of the session properties.

Steps:

These options will make the session as Update and Insert records without using Update Strategy in Target Table.

When we need to update a huge table with few records and less inserts, we can use this solution to improve the session performance.

The solutions for such situations is not to use Lookup Transformation and Update Strategy to insert and update records.

The Lookup Transformation may not perform better as the lookup table size increases and it also degrades the performance.

Like IIF (IISNULL (CUST_DIM_KEY), DD_INSERT,

IIF (SRC_CUST_ID! =TGT_CUST_ID), DD_UPDATE, DD_REJECT))

Here we are checking if CUST_DIM_KEY is not null then if SRC_CUST_ID is equal to the TGT_CUST_ID. If they are equal, then we do not take any action on those rows; they are getting rejected.

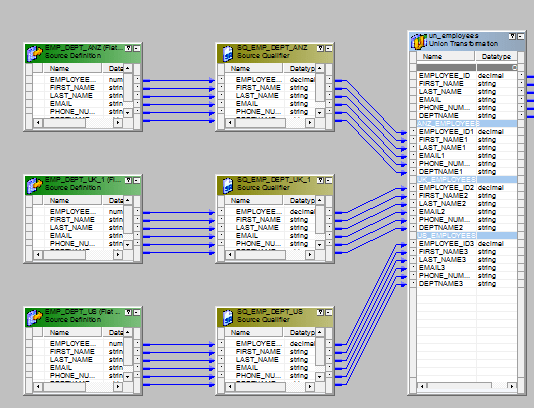

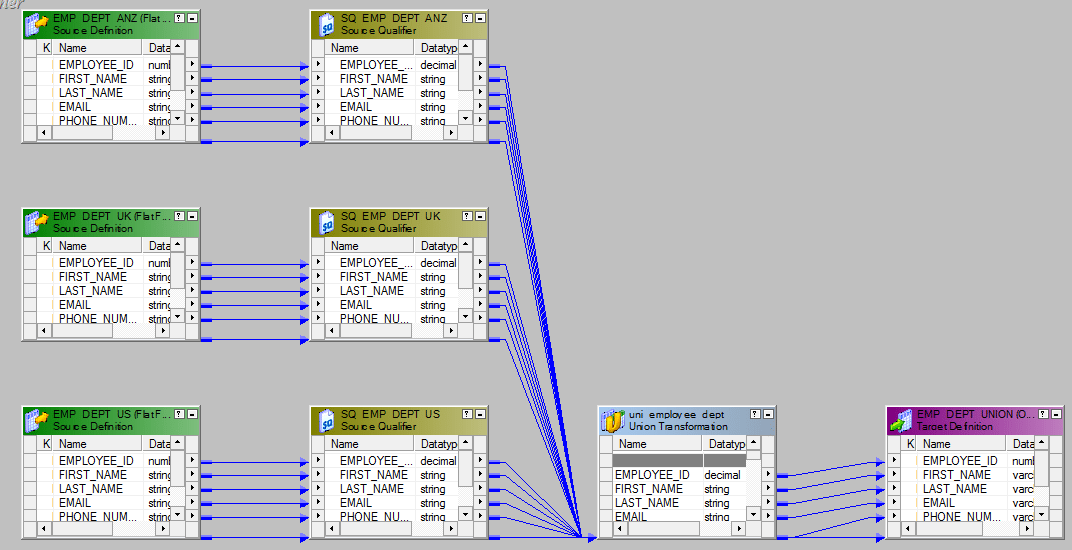

Union Transformation

In union transformation, though the total number of rows passing into the Union is the same as the total number of rows passing out of it, the positions of the rows are not preserved, i.e. row number 1 from input stream 1 might not be row number 1 in the output stream. Union does not even guarantee that the output is repeatable. Hence it is an Active Transformation.

Let us say, this is our source

| Cust_id | Cust_name | Cust_amount | Cust_Place | Cust_zip |

| 101 | AD | 160 | KL | 700098 |

| 102 | BG | 170 | KJ | 560078 |

| NULL | NULL | 180 | KH | 780098 |

The target structure is also the same but, we have got two tables, one which will contain the NULL records and one which will contain non NULL records.

We can design the mapping as mentioned below.

SQ –> EXP –> RTR –> TGT_NULL/TGT_NOT_NULL

EXP – Expression transformation create an output port

O_FLAG= IIF ( (ISNULL(cust_id) OR ISNULL(cust_name) OR ISNULL(cust_amount) OR ISNULL(cust _place) OR ISNULL(cust_zip)), ‘NULL’,’NNULL’)

** Assuming you need to redirect in case any of value is null

OR

O_FLAG= IIF ( (ISNULL(cust_name) AND ISNULL(cust_no) AND ISNULL(cust_amount) AND ISNULL(cust _place) AND ISNULL(cust_zip)), ‘NULL’,’NNULL’)

** Assuming you need to redirect in case all of value is null

RTR – Router transformation two groups

Group 1 connected to TGT_NULL ( Expression O_FLAG=’NULL’)

Group 2 connected to TGT_NOT_NULL ( Expression O_FLAG=’NNULL’)

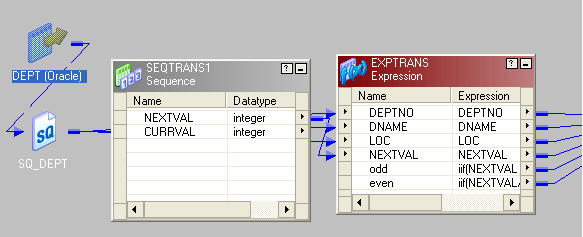

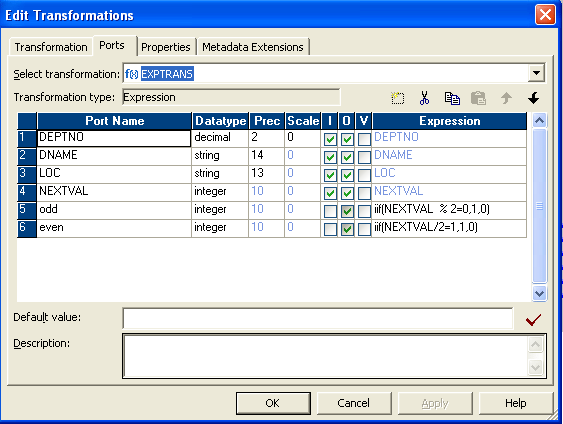

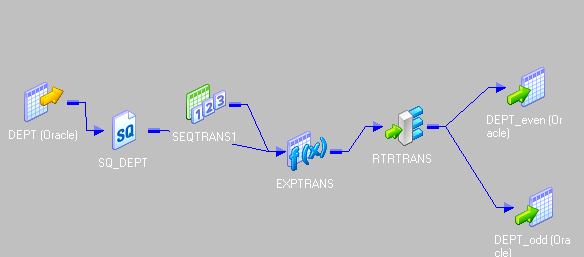

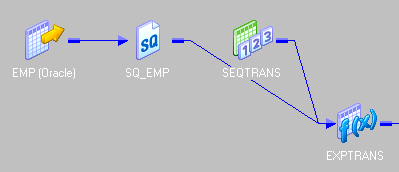

The idea is to add a sequence number to the records and then divide the record number by 2. If it is divisible, then move it to one target and if not then move it to other target.

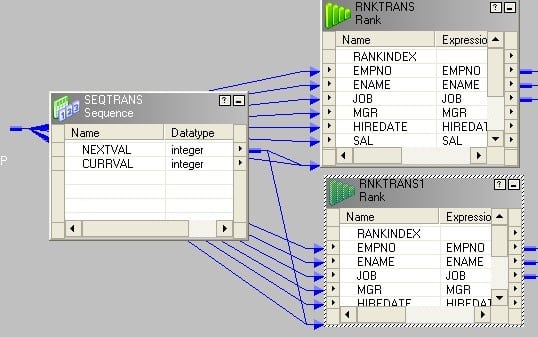

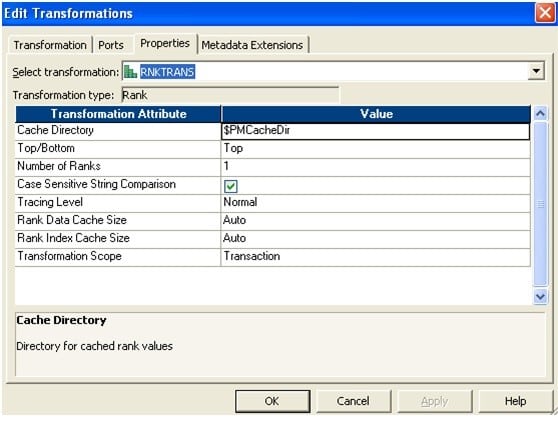

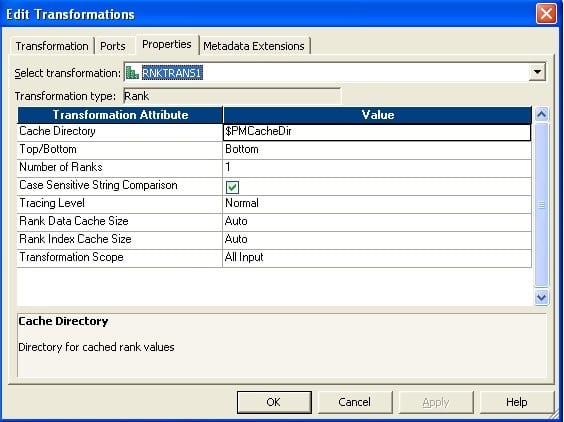

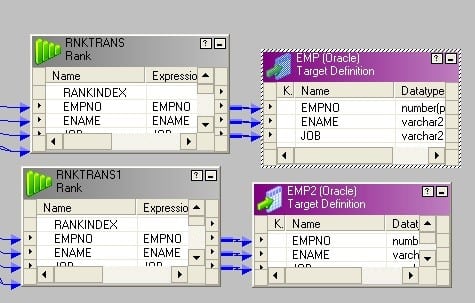

The idea behind this is to add a sequence number to the records and then take the Top 1 rank and Bottom 1 Rank from the records.

This is applicable for any n= 2, 3,4,5,6… For our example, n = 5. We can apply the same logic for any n.

The idea behind this is to add a sequence number to the records and divide the sequence number by n (for this case, it is 5). If completely divisible, i.e. no remainder, then send them to one target else, send them to the other one.

Source Table:

| COL1 | COL2 | COL3 |

| a | b | c |

| x | y | z |

| a | b | c |

| r | f | u |

| a | b | c |

| v | f | r |

| v | f | r |

Target Table 1: Table containing all the unique rows

| COL1 | COL2 | COL3 |

| a | b | c |

| x | y | z |

| r | f | u |

| v | f | r |

Target Table 2: Table containing all the duplicate rows

| COL1 | COL2 | COL3 |

| a | b | c |

| a | b | c |

| v | f | r |

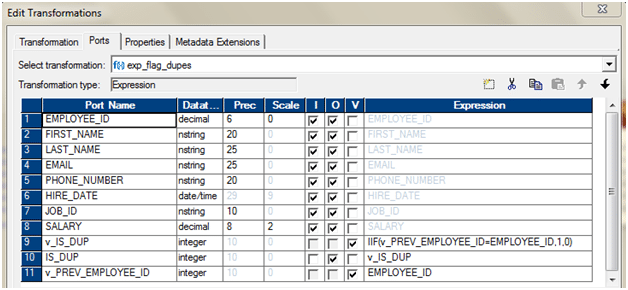

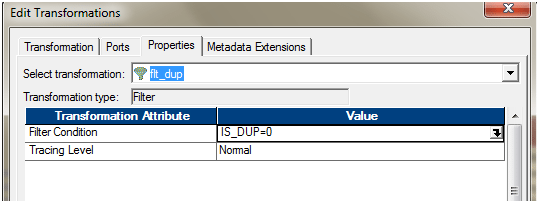

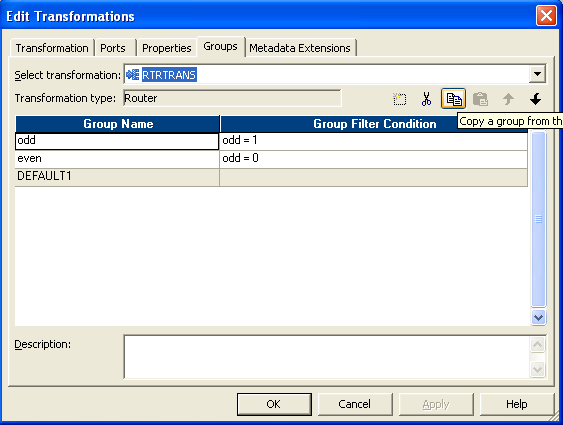

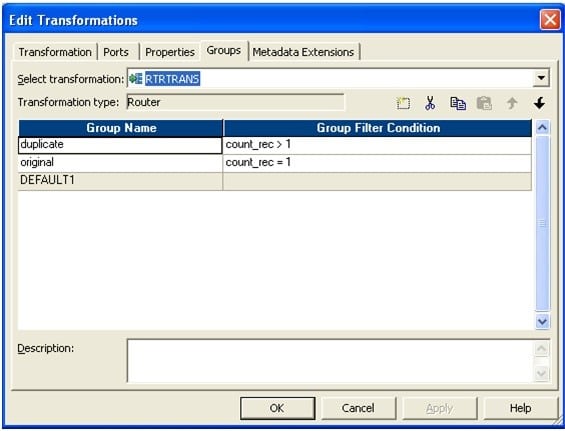

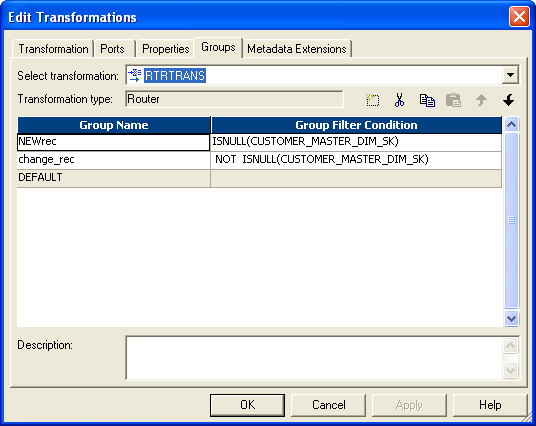

The picture below depicts the group name and the filter conditions.

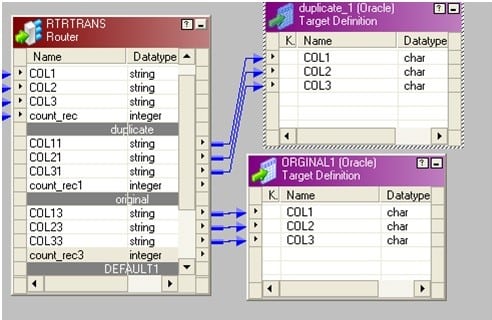

The picture below depicts the group name and the filter conditions. Connect two groups to corresponding target tables.

Connect two groups to corresponding target tables.

SQL query:

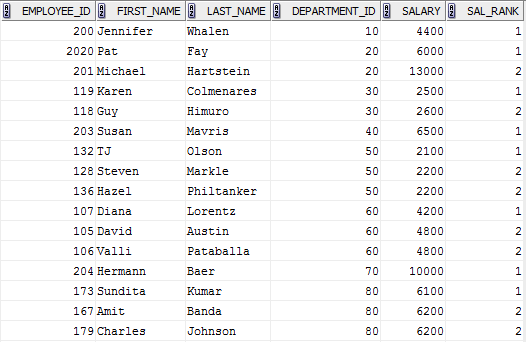

You can use this kind of query to fetch more than 1 Max salary for each department.

SELECT * FROM (

SELECT EMPLOYEE_ID, FIRST_NAME, LAST_NAME, DEPARTMENT_ID, SALARY, RANK () OVER (PARTITION BY DEPARTMENT_ID ORDER BY SALARY) SAL_RANK FROM EMPLOYEES)

WHERE SAL_RANK <= 2

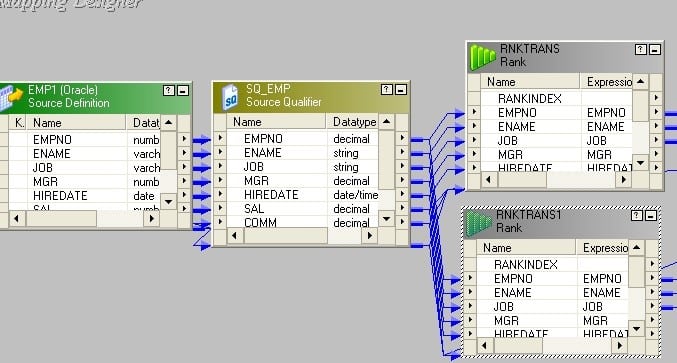

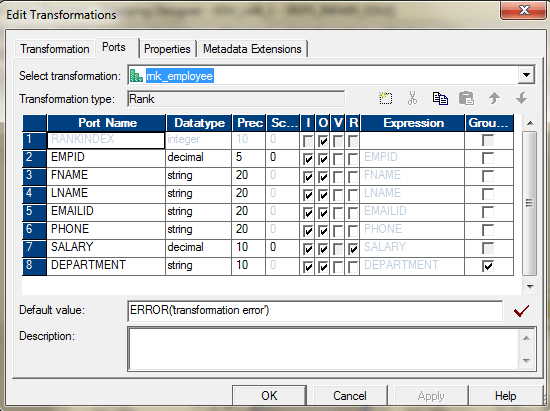

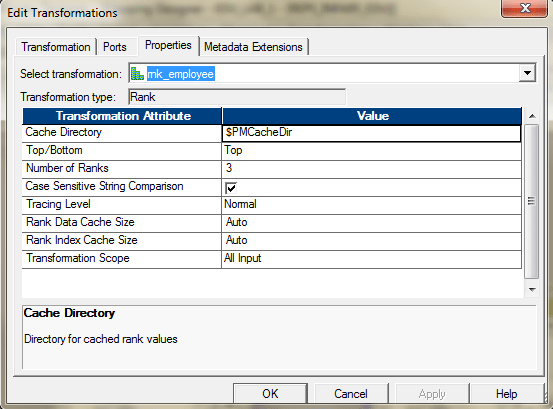

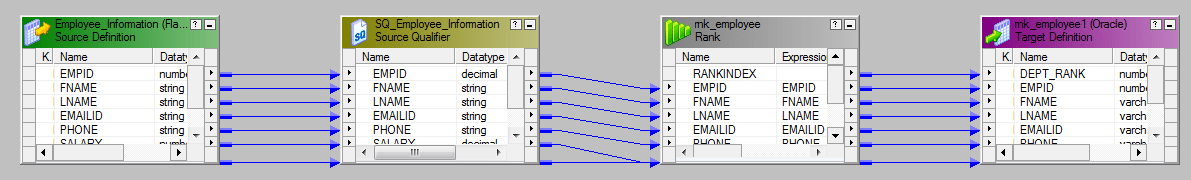

Informatica Approach:

We can use the Rank transformation to achieve this.

Use Department_ID as the group key.

In the properties tab, select Top, 3.

The entire mapping should look like this.

This will give us the top 3 employees earning maximum salary in their respective departments.

We can use Normalizer transformation for this. If we do not want to use Normalizer, then there is one alternate way for this.

We have a source table containing 3 columns: Col1, Col2 and Col3. There is only 1 row in the table as follows:

| Col1 | Col2 | Col3 |

| a | b | C |

There is target table contains only 1 column Col. Design a mapping so that the target table contains 3 rows as follows:

Col |

| a |

| b |

| c |

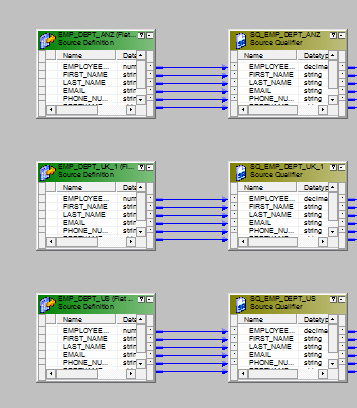

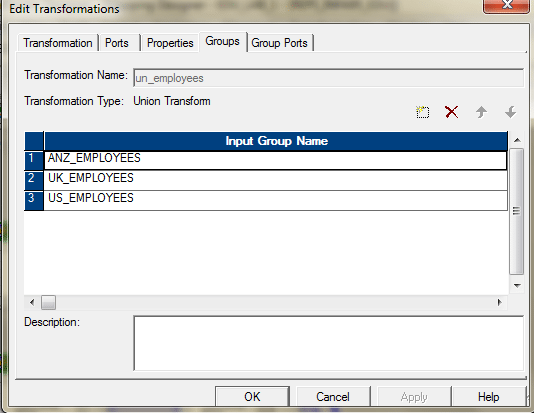

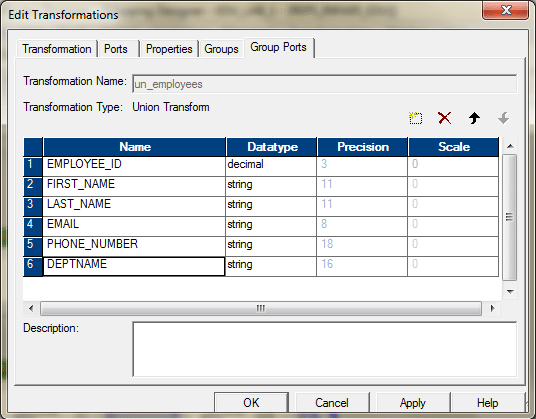

We will have to use the Union Transformation here. Union Transformation is a multiple input group transformation and it has only one output group.

Group Ports Tab.

Group Ports Tab.

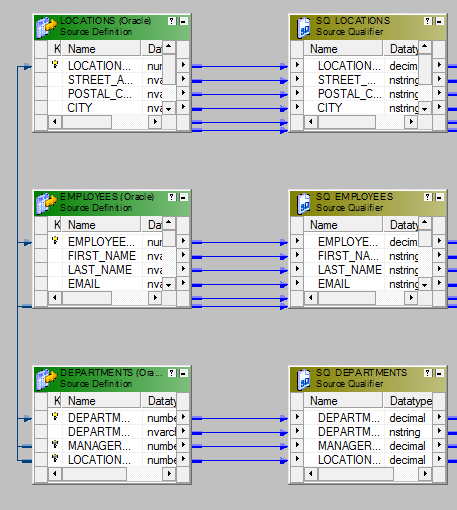

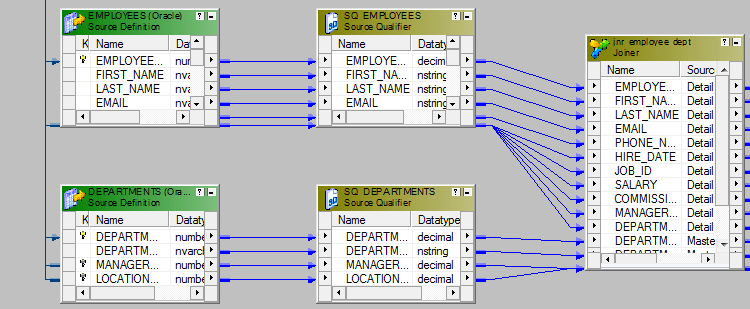

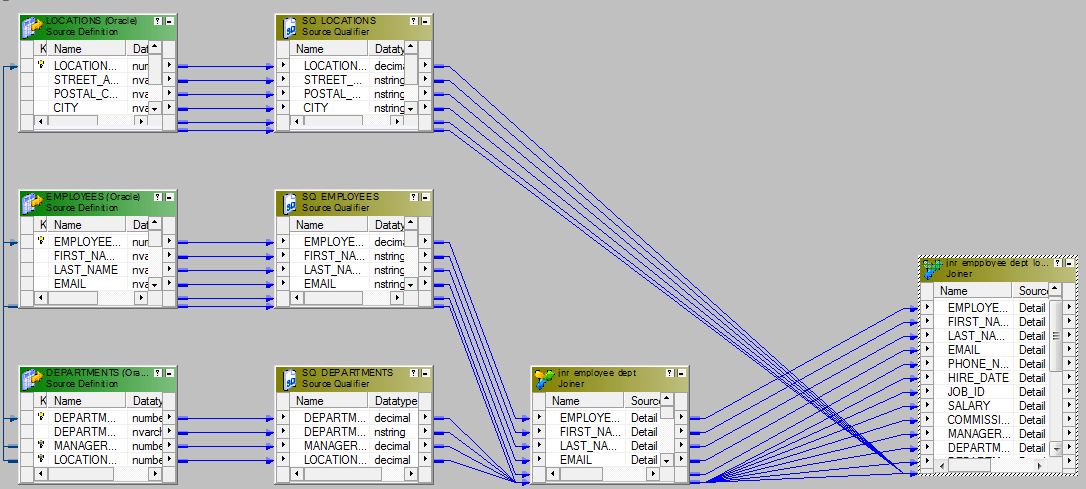

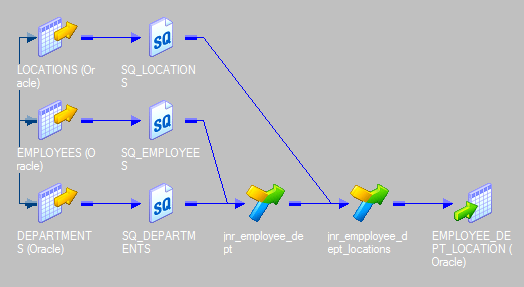

We cannot join more than two sources using a single joiner. To join three sources, we need to have two joiner transformations.

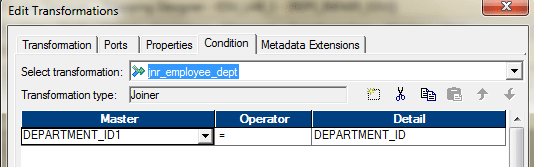

Let’s say, we want to join three tables – Employees, Departments and Locations – using Joiner. We will need two joiners. Joiner-1 will join, Employees and Departments and Joiner-2 will join, the output from the Joiner-1 and Locations table.

Here are the steps.

There are three different data models that exist.

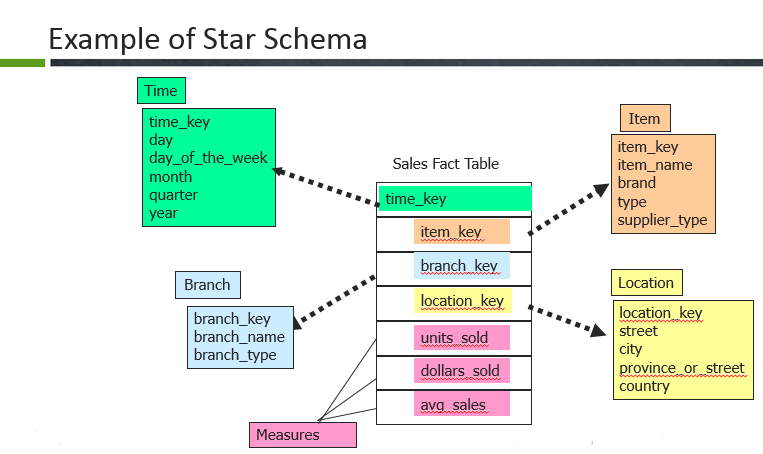

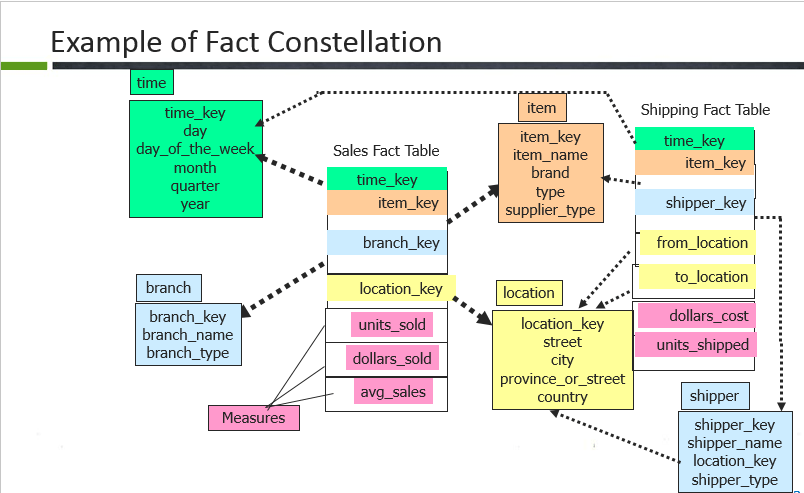

Here, the Sales fact table is a fact table and the surrogate keys of each dimension table are referred here through foreign keys. Example: time key, item key, branch key, location key. The fact table is surrounded by the dimension tables such as Branch, Location, Time and item. In the fact table there are dimension keys such as time_key, item_key, branch_key and location_keys and measures are untis_sold, dollars sold and average sales.Usually, fact table consists of more rows compared to dimensions because it contains all the primary keys of the dimension along with its own measures.

Here, the Sales fact table is a fact table and the surrogate keys of each dimension table are referred here through foreign keys. Example: time key, item key, branch key, location key. The fact table is surrounded by the dimension tables such as Branch, Location, Time and item. In the fact table there are dimension keys such as time_key, item_key, branch_key and location_keys and measures are untis_sold, dollars sold and average sales.Usually, fact table consists of more rows compared to dimensions because it contains all the primary keys of the dimension along with its own measures.

Dimension table is the one that describes business entities of an enterprise, represented as hierarchical, categorical information such as time, departments, locations, products etc.

Types of dimensions in data warehouse

A dimension table consists of the attributes about the facts. Dimensions store the textual descriptions of the business. Without the dimensions, we cannot measure the facts. The different types of dimension tables are explained in detail below.

The centralized table in the star schema is called the Fact table. A Fact table typically contains two types of columns. Columns which contains the measure called facts and columns, which are foreign keys to the dimension tables. The Primary key of the fact table is usually the composite key that is made up of the foreign keys of the dimension tables.

Types of Facts in Data Warehouse

A fact table is the one which consists of the measurements, metrics or facts of business process. These measurable facts are used to know the business value and to forecast the future business. The different types of facts are explained in detail below.

Factless Fact Table:

In the real world, it is possible to have a fact table that contains no measures or facts. These tables are called “Factless Fact tables”.

E.g: A fact table which has only product key and date key is a factless fact. There are no measures in this table. But still you can get the number products sold over a period of time.

A fact table that contains aggregated facts are often called summary tables.

SCD Type1 Mapping

The SCD Type 1 methodology overwrites old data with new data, and therefore does not need to track historical data.

SCD Type2 Mapping

In Type 2 Slowly Changing Dimension, if one new record is added to the existing table with a new information then, both the original and the new record will be presented having new records with its own primary key.

SCD Type3 Mapping

In SCD Type3, there should be two columns added to identifying a single attribute. It stores one time historical data with current data.

Any Informatica Transformation created in the Transformation Developer or a non-reusable promoted to reusable transformation from the mapping designer which can be used in multiple mappings is known as Reusable Transformation.

When we add a reusable transformation to a mapping, we actually add an instance of the transformation. Since the instance of a reusable transformation is a pointer to that transformation, when we change the transformation in the Transformation Developer, its instances reflect these changes.

A Mapplet is a reusable object created in the Mapplet Designer which contains a set of transformations and lets us reuse the transformation logic in multiple mappings.

A Mapplet can contain as many transformations as we need. Like a reusable transformation when we use a mapplet in a mapping, we use an instance of the mapplet and any change made to the mapplet is inherited by all instances of the mapplet.

Target Load Order:

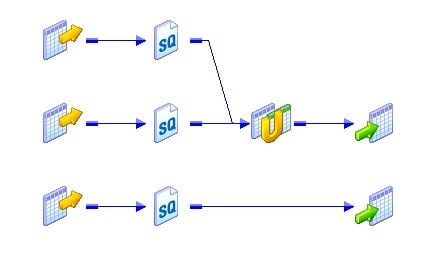

Target load order (or) Target load plan is used to specify the order in which the integration service loads the targets. You can specify a target load order based on the source qualifier transformations in a mapping. If you have multiple source qualifier transformations connected to multiple targets, you can specify the order in which the integration service loads the data into the targets.

Target Load Order Group:

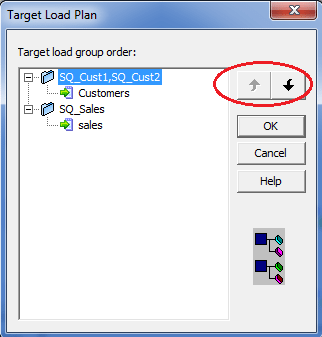

A target load order group is the collection of source qualifiers, transformations and targets linked in a mapping. The integration service reads the target load order group concurrently and it processes the target load order group sequentially. The following figure shows the two target load order groups in a single mapping.

Use of Target Load Order:

Target load order will be useful when the data of one target depends on the data of another target. For example, the employees table data depends on the departments data because of the primary-key and foreign-key relationship. So, the departments table should be loaded first and then the employees table. Target load order is useful when you want to maintain referential integrity when inserting, deleting or updating tables that have the primary key and foreign key constraints.

Target Load Order Setting:

You can set the target load order or plan in the mapping designer. Follow the below steps to configure the target load order:

1. Login to the PowerCenter designer and create a mapping that contains multiple target load order groups.

2. Click on the Mappings in the toolbar and then on Target Load Plan. The following dialog box will pop up listing all the source qualifier transformations in the mapping and the targets that receive data from each source qualifier.

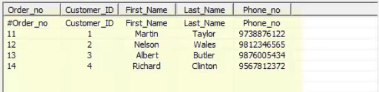

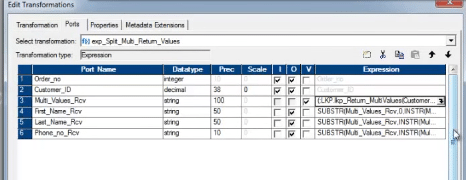

We can only return one port from the Unconnected Lookup transformation. As the Unconnected lookup is called from another transformation, we cannot return multiple columns using Unconnected Lookup transformation.

However, there is a trick. We can use the SQL override and concatenate the multiple columns, those we need to return. When we can the lookup from another transformation, we need to separate the columns again using substring.

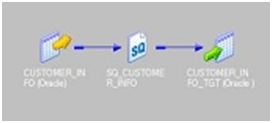

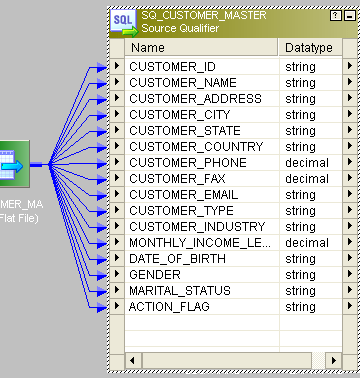

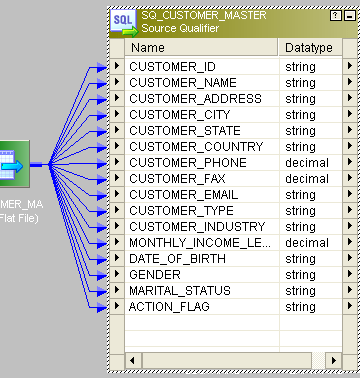

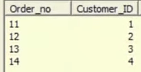

As a scenario, we are taking one source, containing the Customer_id and Order_id columns.

Source:

We need to look up the Customer_master table, which holds the Customer information, like Name, Phone etc.

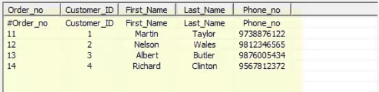

The target should look like this:

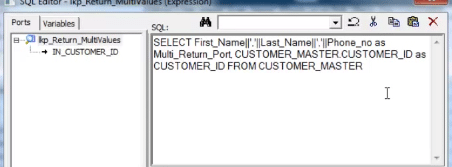

Let’s have a look at the Unconnected Lookup.

The SQL Override, with concatenated port/column:

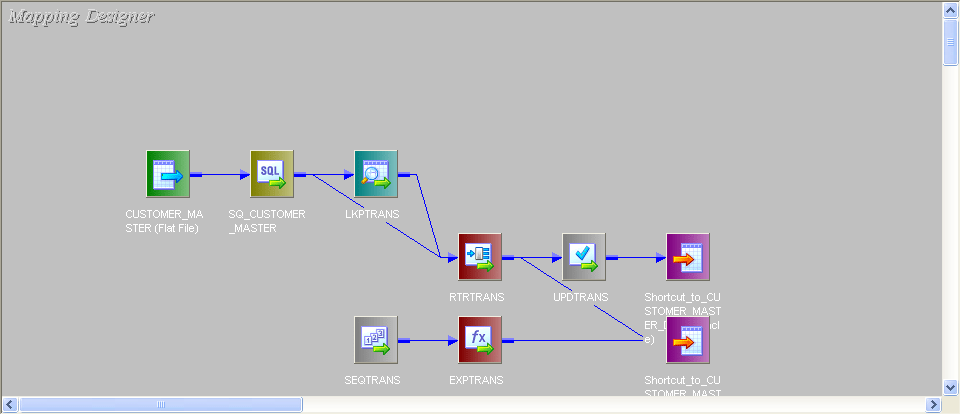

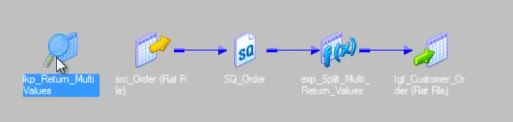

Entire mapping will look like this.

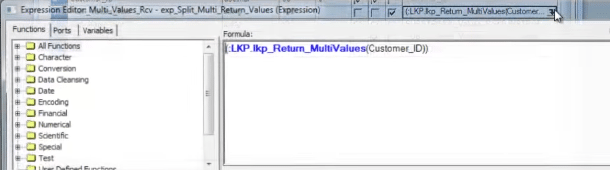

We are calling the unconnected lookup from one expression transformation.

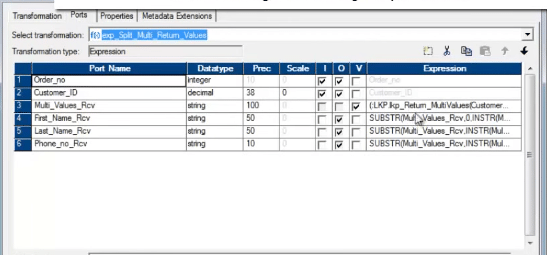

Below is the screen shot of the expression transformation.

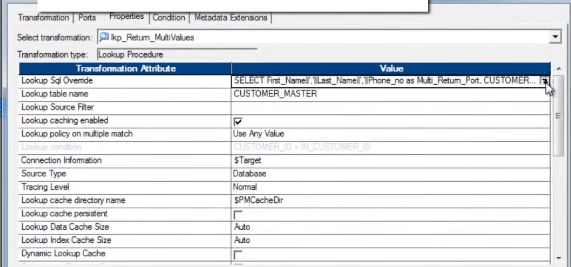

After execution of the above mapping, below is the target, that is populated.

I am pretty confident that after going through both these Informatica Interview Questions blog, you will be fully prepared to take Informatica Interview without any hiccups. If you wish to deep dive into Informatica with use cases, I will recommend you to go through our website and enrol at the earliest.

The Edureka course on Informatica helps you master ETL and data mining using Informatica PowerCenter Designer. The course aims to enhance your career path in data mining through live projects and interactive tutorials by industry experts.

Got a question for us? Please mention it in the comments section and we will get back to you.

Related Posts:

Get Started with Informatica PowerCenter 9.X Developer & Admin

Informatica Interview Questions for Beginners

Career Progression with Informatica: All You Need to Know

| Course Name | Date | |

|---|---|---|

| Informatica Certification Training Course | Class Starts on 25th February,2023 25th February SAT&SUN (Weekend Batch) | View Details |

| Informatica Certification Training Course | Class Starts on 15th April,2023 15th April SAT&SUN (Weekend Batch) | View Details |

REGISTER FOR FREE WEBINAR

REGISTER FOR FREE WEBINAR  Thank you for registering Join Edureka Meetup community for 100+ Free Webinars each month JOIN MEETUP GROUP

Thank you for registering Join Edureka Meetup community for 100+ Free Webinars each month JOIN MEETUP GROUP

edureka.co

Nice Questions…Please Visit http://www.itnirvanas.com/2018/02/top-informatica-interview-questions.html for more questions

I have created mapping parameter $$Runnumber, and in mapping i have there is logic in expression transformation.

IIF($$Runnumber=1, then some logic and IIF($$Runnumber=2 then some logic.

So, how to write parameter file details for run numbers. based on run number mapping logic will load the data to target.

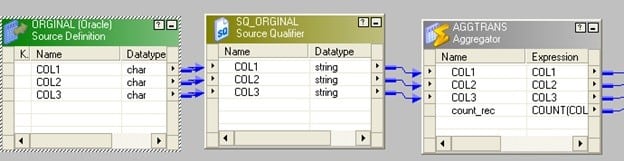

I believe for the Scenario-14, as per the solution given one table will contain all the duplicate records and another table will contain only unique records as below as we are using aggregator transformation.

Duplicate:

COL1 COL2 COL3

a b c

x y z

v f r

Original:

COL1 COL2 COL3

r f u

If my understanding is correct can you please provide the correct answer.

Worth reading blog..

We are happy you found our blog useful Charan! Do subscribe and stay connected with us. Thanks :)

Very good questions with nice explanations for beginners.

Hey Nitin, thanks for the wonderful feedback! You might like this blog too: https://www.edureka.co/blog/informatica-tutorial. Also, please subscribe to our blog to stay posted on the upcoming Informatica posts. Cheers!

Thanks for posting one more useful blog. can you provide such blogs for Talend also ?

Hey Nitin, here are a few Talend blogs that you might find relevant: https://www.edureka.co/blog?s=talend. You can also check out our Talend tutorials on YouTube: https://www.youtube.com/playlist?list=PL9ooVrP1hQOGPJgFdMaUeNSktk70k3yJP. Hope this helps. Cheers!