Azure Data Engineer Associate Certification C ...

- 2k Enrolled Learners

- Weekend

- Live Class

This blog post talks about important Hadoop configuration files and provides examples on the same. A thorough understanding of this topic is crucial for obtaining your Big Data Architect Masters Certification and performing all its projects. Let’s start with the Master-Slave concepts that are essential to understand Hadoop’s configuration files.

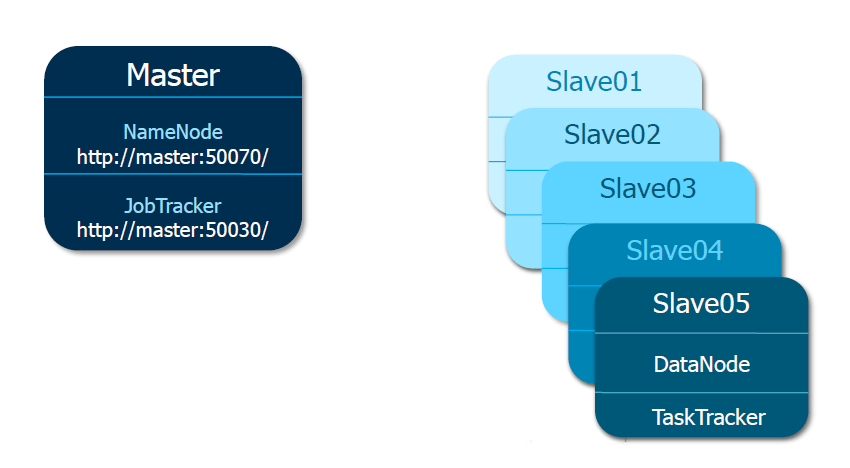

Slaves contain a list of hosts, one per line, that are needed to host DataNode and TaskTracker servers. The Masters contain a list of hosts, one per line, that are required to host secondary NameNode servers. The Masters file informs about the Secondary NameNode location to Hadoop daemon. The ‘Masters’ file at Master server contains a hostname, Secondary Name Node servers.

The Hadoop-env.sh, core-ite.xml, hdfs-site.xml, mapred-site.xml, Masters and Slaves are all available under ‘conf’ directory of Hadoop installation directory.

The core-site.xml file informs Hadoop daemon where NameNode runs in the cluster. It contains the configuration settings for Hadoop Core such as I/O settings that are common to HDFS and MapReduce.

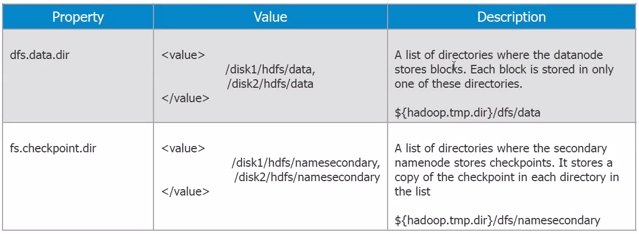

The hdfs-site.xml file contains the configuration settings for HDFS daemons; the NameNode, the Secondary NameNode, and the DataNodes. Here, we can configure hdfs-site.xml to specify default block replication and permission checking on HDFS. The actual number of replications can also be specified when the file is created. The default is used if replication is not specified in create time. The best way to become a Data Engineer si by getting the Azure Data Engineering Course in Atlanta.

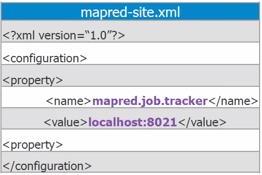

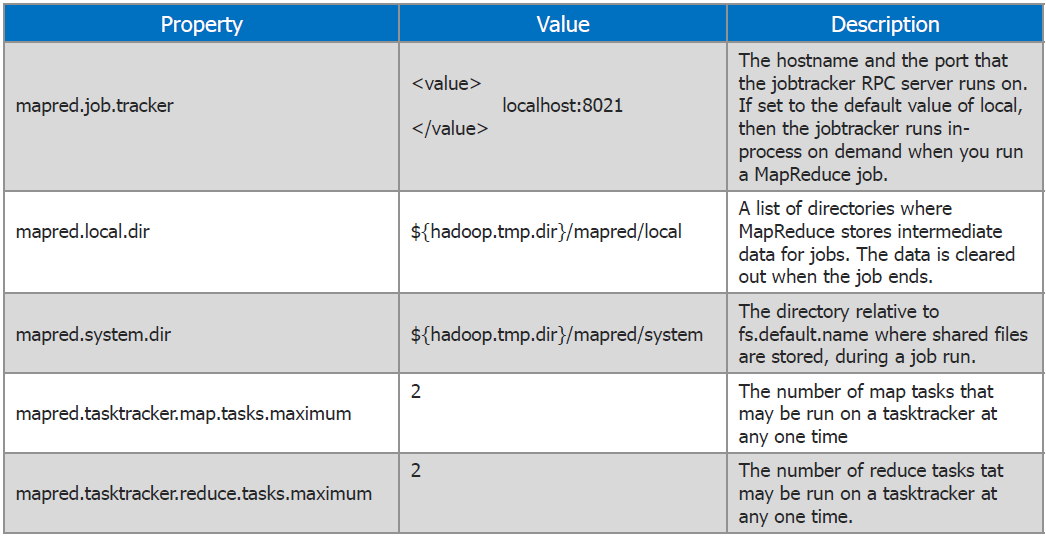

The mapred-site.xml file contains the configuration settings for MapReduce daemons; the job tracker and the task-trackers.

The following links provide more details on configuration files:

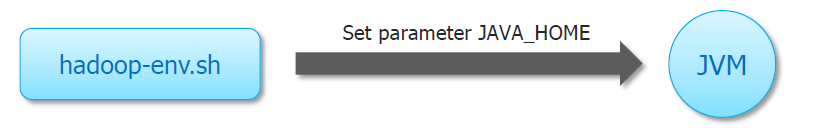

This file offers a way to provide customer parameters for each of the servers. Hadoop-env.sh is sourced by the entire Hadoop core scripts provided in the ‘conf/’ directory of the installation.

Here are some examples of environment variables than can be specified:

exportHADOOP_DATANODE_HEAPSIZE=”128″

exportHADOOP_TASKTRACKER_HEAPSIZE=”512″

The ‘hadoop-metrics.properties’ file controls the reporting and the default condition is set as not to report.

The Hadoop core uses Shell (SSH) to launch the server processes on the slave nodes and that requires password-less SSH connection between the Master and all the Slaves and secondary machines.

Facebook uses Hadoop to store copies of internal log and dimension data sources and use it as a source for reporting, analytics and machine learning. Currently, Facebook has two major clusters: A 1100-machine cluster with 800 cores and about 12 PB raw storage. Another one is a 300 machine cluster with 2,400 cores and about 3 PB raw storage. Each of the commodity node has 8 cores and 12 TB storage.

Facebook uses streaming and Java API a lot and have used Hive to build a higher-level data warehousing framework. They have also developed a FUSE application over HDFS.

You can get a better understanding with the Azure Data Engineering course.

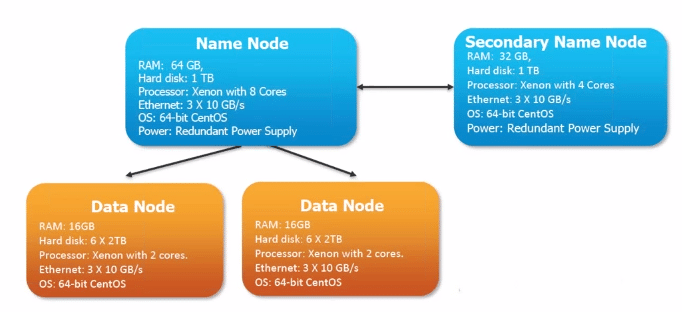

The above image clearly explains the configuration of each nodes. NameNode has high memory requirement and will have a lot of RAM and does not require a lot of memory on hard disk. The memory requirement for a secondary NameNode is not as high as the primary NameNode. Each DataNode requires 16 GB of memory and are high on hard disk as they are supposed to store data. They have multiple drives as well. Learn more from this Big Data Course about Hadoop Clusters, HDFS, and other important topics to become a Hadoop professional.

Got a question for us? Please mention them in the comments section and we will get back to you.

Related Posts:

Related Posts:

| Course Name | Date | |

|---|---|---|

| Big Data Hadoop Certification Training Course | Class Starts on 11th February,2023 11th February SAT&SUN (Weekend Batch) | View Details |

| Big Data Hadoop Certification Training Course | Class Starts on 8th April,2023 8th April SAT&SUN (Weekend Batch) | View Details |

REGISTER FOR FREE WEBINAR

REGISTER FOR FREE WEBINAR  Thank you for registering Join Edureka Meetup community for 100+ Free Webinars each month JOIN MEETUP GROUP

Thank you for registering Join Edureka Meetup community for 100+ Free Webinars each month JOIN MEETUP GROUP

edureka.co

Recent study reveals that approximately 75% people are occupied into internet jobs. Online world-wide is becoming bigger and even better and generating a great number of make money online opportunities. Work from home on line jobs are trending and developing people’s day-to-day lives. Exactly why it is really well known? Because it enables you to do the job from anywhere and any time. One gets much more time to dedicate with your family and can plan out trips for vacations. Men and women are making great income of $21000 each week by utilizing the efficient and smart techniques. Carrying out right work in a right direction will definitely lead us in direction of becoming successful. You can begin to earn from the first day after you look at our web-site. >>>>> CONTACT US TODAY