Azure Data Engineer Associate Certification C ...

- 2k Enrolled Learners

- Weekend

- Live Class

As we mentioned in our Hadoop Ecosytem blog, HBase is an essential part of our Hadoop ecosystem. So now, I would like to take you through HBase tutorial, where I will introduce you to Apache HBase, and then, we will go through the Facebook Messenger case-study. We are going to cover following topics in this HBase tutorial blog:

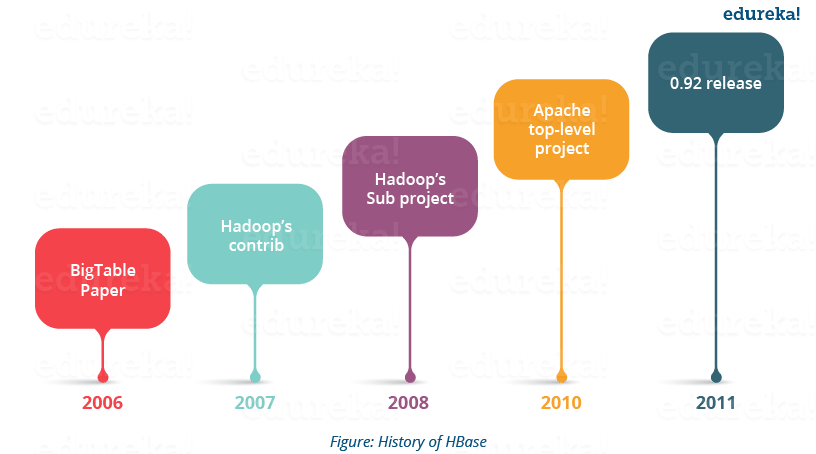

Let us start with the history of HBase and know how HBase has evolved over a period of time.

After knowing about the history of Apache HBase, you would be curious to know what is Apache HBase? Let us move further and take a look.

HBase is an open source, multidimensional, distributed, scalable and a NoSQL database written in Java. HBase runs on top of HDFS (Hadoop Distributed File System) and provides BigTable like capabilities to Hadoop. It is designed to provide a fault tolerant way of storing large collection of sparse data sets.

Since, HBase achieves high throughput and low latency by providing faster Read/Write Access on huge data sets. Therefore, HBase is the choice for the applications which require fast & random access to large amount of data.

It provides compression, in-memory operations and Bloom filters (data structure which tells whether a value is present in a set or not) to fulfill the requirement of fast and random read-writes.

Let’s understand it through an example: A jet engine generates various types of data from different sensors like pressure sensor, temperature sensor, speed sensor, etc. which indicates the health of the engine. This is very useful to understand the problems and status of the flight. Continuous Engine Operations generates 500 GB data per flight and there are 300 thousand flights per day approximately. So, Engine Analytics applied to such data in near real time can be used to proactively diagnose problems and reduce unplanned downtime. This requires a distributed environment to store large amount of data with fast random reads and writes for real time processing. Here, HBase comes for the rescue. I will talk about HBase Read and Write in detail in my next blog on HBase Architecture.

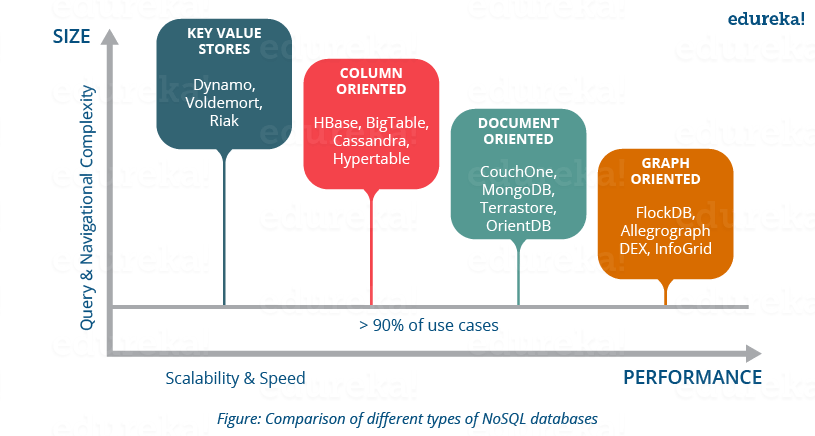

As we know, HBase is a NoSQL database. So, before understanding more about HBase, lets first discuss about the NoSQL databases and its types.

NoSQL means Not only SQL. NoSQL databases is modeled in a way that it can represent data other than tabular formats, unkile relational databases. It uses different formats to represent data in databases and thus, there are different types of NoSQL databases based on their representation format. Most of NoSQL databases leverages availability and speed over consistency. Now, let us move ahead and understand about the different types of NoSQL databases and their representation formats.

It is a schema-less database which contains keys and values. Each key, points to a value which is an array of bytes, can be a string, BLOB, XML, etc. e.g. Lamborghini is a key and can point to a value Gallardo, Aventador, Murciélago, Reventón, Diablo, Huracán, Veneno, Centenario etc.

Key-Value stores databases: Aerospike, Couchbase, Dynamo, FairCom c-treeACE, FoundationDB, HyperDex, MemcacheDB, MUMPS, Oracle NoSQL Database, OrientDB, Redis, Riak, Berkeley DB.

Key-value stores handle size well and are good at processing a constant stream of read/write operations with low latency. This makes them perfect for User preference and profile stores, Product recommendations; latest items viewed on a retailer website for driving future customer product recommendations, Ad servicing; customer shopping habits result in customized ads, coupons, etc. for each customer in real-time.

It follows the same key value pair, but it is semi structured like XML, JSON, BSON. These structures are considered as documents.

Document Based databases: Apache CouchDB, Clusterpoint, Couchbase, DocumentDB, HyperDex, IBM Domino, MarkLogic, MongoDB, OrientDB, Qizx, RethinkDB.

As document supports flexible schema, fast read write and partitioning makes it suitable for creating user databases in various services like twitter, e-commerce websites etc.

In this database, data is stored in cell grouped in column rather than rows. Columns are logically grouped into column families which can be either created during schema definition or at runtime.

These types of databases store all the cell corresponding to a column as continuous disk entry, thus making the access and search much faster.

Column Based Databases: HBase, Accumulo, Cassandra, Druid, Vertica.

It supports the huge storage and allow faster read write access over it. This makes column oriented databases suitable for storing customer behaviors in e-commerce website, financial systems like Google Finance and stock market data, Google maps etc.

It is a perfect flexible graphical representation, used unlike SQL. These types of databases easily solve address scalability problems as it contains edges and node which can be extended according to the requirements.

Graph based databases: AllegroGraph, ArangoDB, InfiniteGraph, Apache Giraph, MarkLogic, Neo4J, OrientDB, Virtuoso, Stardog.

This is basically used in Fraud detection, Real-time recommendation engines (in most cases e-commerce), Master data management (MDM), Network and IT operations, Identity and access management (IAM), etc.

HBase and Cassandra are the two famous column oriented databases. So, now talking it to a higher level, let us compare and understand the architectural and working differences between HBase and Cassandra.

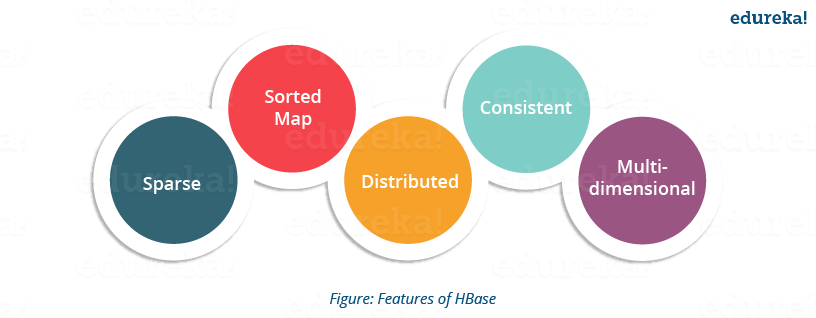

Now let’s take a deep dive and understand the features of Apache HBase which makes it so popular.

Now moving ahead in this HBase tutorial, let me tell you what are the use-cases and scenarios where HBase can be used and then, I will compare HDFS and HBase.

I would like draw your attention toward the scenarios in which the HBase is the best fit.

Before I jump to Facebook messenger case study, let me tell you what are the differences between HBase and HDFS.

HDFS is a Java based distributed file system that allows you to store large data across multiple nodes in a Hadoop cluster. So, HDFS is an underlying storage system for storing the data in the distributed environment. HDFS is a file system, whereas HBase is a database (similar as NTFS and MySQL).

As Both HDFS and HBase stores any kind of data (i.e. structured, semi-structured and unstructured) in a distributed environment so lets look at the differences between HDFS file system and HBase, a NoSQL database.

HDFS stores large data sets in a distributed environment and leverages batch processing on that data. E.g. it would help an e-commerce website to store millions of customer’s data in a distributed environment which grew over a long period of time(might be 4-5 years or more). Then it leverages batch processing over that data and analyze customer behaviors, pattern, requirements. Then the company could find out what type of product, customer purchase in which months. It helps to store archived data and execute batch processing over it.

While HBase stores data in a column oriented manner where each column is stored together so that, reading becomes faster leveraging real time processing. E.g. in a similar e-commerce environment, it stores millions of product data. So if you search for a product among millions of products, it optimizes the request and search process, producing the result immediately (or you can say in real time). The detailed HBase architectural explanation, I will be covering in my next blog.

As we know HBase is distributed over HDFS, so a combination of both gives us a great opportunity to use the benefits of both, in a tailored solution, as we are going to see in the below Facebook messenger case study.

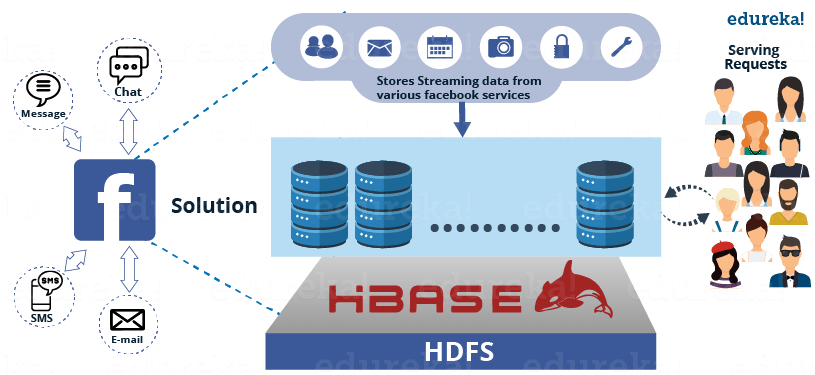

Facebook Messaging Platform shifted from Apache Cassandra to HBase in November 2010.

Facebook Messenger combines Messages, email, chat and SMS into a real-time conversation. Facebook was trying to build a scalable and robust infrastructure to handle set of these services.

At that time the message infrastructure handled over 350 million users sending over 15 billion person-to-person messages per month. The chat service supports over 300 million users who send over 120 billion messages per month.

By monitoring the usage, they found out that, two general data patterns emerged:

Facebook wanted to find a storage solution for these two usage patterns and they started investigating to find a replacement for the existing Messages infrastructure.

Earlier in 2008, they used open-source database, i.e. Cassandra, which is an eventual-consistency key-value store that was already in production serving traffic for Inbox Search. Their teams had a great knowledge in using and managing a MySQL database, so switching either of the technologies was a serious concern for them.

They spent a few weeks testing different frameworks, to evaluate the clusters of MySQL, Apache Cassandra, Apache HBase and other systems. They ultimately selected HBase.

As MySQL failed to handle the large data sets efficiently, as the indexes and data sets grew large, the performance suffered. They found Cassandra unable to handle difficult pattern to reconcile their new Messages infrastructure.

Figure: Challenges faced by Facebook messenger

For all these problems, Facebook came up with a solution i.e. HBase. Facebook adopted HBase for serving Facebook messenger, chat, email, etc. due to its various features.

HBase comes with very good scalability and performance for this workload with a simpler consistency model than Cassandra. While they found HBase to be the most suitable in terms of their requirements like auto load balancing and failover, compression support, multiple shards per server, etc.

HDFS, which is the underlying file system used by HBase also provided them several needed features such as end-to-end checksums, replication and automatic load re-balancing.

Figure: HBase as a solution to Facebook messenger

As they adopted HBase, they also focused on committing the results back to HBase itself and started working closely with the Apache community.

Since messages accept data from different sources such as SMS, chats and emails, they wrote an application server to handle all decision making for a user’s message. It interfaces with large number of other services. The attachments are stored in a Haystack (which works on HBase). They also wrote a user discovery service on top of Apache ZooKeeper which talk to other infrastructure services for friend relationships, email account verification, delivery decisions and privacy decisions.

Facebook team spent a lot of time confirming that each of these services is robust, reliable and providing good performance to handle a real-time messaging system.

I hope this HBase tutorial blog is informative and you liked it. In this blog, you got to know the basics of HBase and its features. In my next blog of Hadoop Tutorial Series, I will be explaining the architecture of HBase and working of HBase which makes it popular for fast and random read/write.

Now that you have understood the basics of HBase, check out the Hadoop training by Edureka, a trusted online learning company with a network of more than 250,000 satisfied learners spread across the globe. The Edureka Big Data Hadoop Certification Training course helps learners become expert in HDFS, Yarn, MapReduce, Pig, Hive, HBase, Oozie, Flume and Sqoop using real-time use cases on Retail, Social Media, Aviation, Tourism, Finance domain.

Got a question for us? Please mention it in the comments section and we will get back to you.

| Course Name | Date | |

|---|---|---|

| Big Data Hadoop Certification Training Course | Class Starts on 11th February,2023 11th February SAT&SUN (Weekend Batch) | View Details |

| Big Data Hadoop Certification Training Course | Class Starts on 8th April,2023 8th April SAT&SUN (Weekend Batch) | View Details |

REGISTER FOR FREE WEBINAR

REGISTER FOR FREE WEBINAR  Thank you for registering Join Edureka Meetup community for 100+ Free Webinars each month JOIN MEETUP GROUP

Thank you for registering Join Edureka Meetup community for 100+ Free Webinars each month JOIN MEETUP GROUP

edureka.co